I try not to market my own company too much on my blog, but sometimes I get to do something cool and feel compelled to share. During a recent round of UAV test flights (which we’re working on turning into some upcoming papers), the Keystone Aerial Surveys crew decided on a whim to fly around some old trucks near the test field. They used two platforms: our DJI Inspire 1 carrying a Zenmuse X5, and a SteadiDrone Mavrik X8 carrying a Sony a7r.

I’m here to tell you how we turned those pictures into a super cool and useful dataset.

The Flight

This was a small side test to get some of the newer UAV pilots comfortable with the system and platform, but in the process they ended up with a great survey flight.

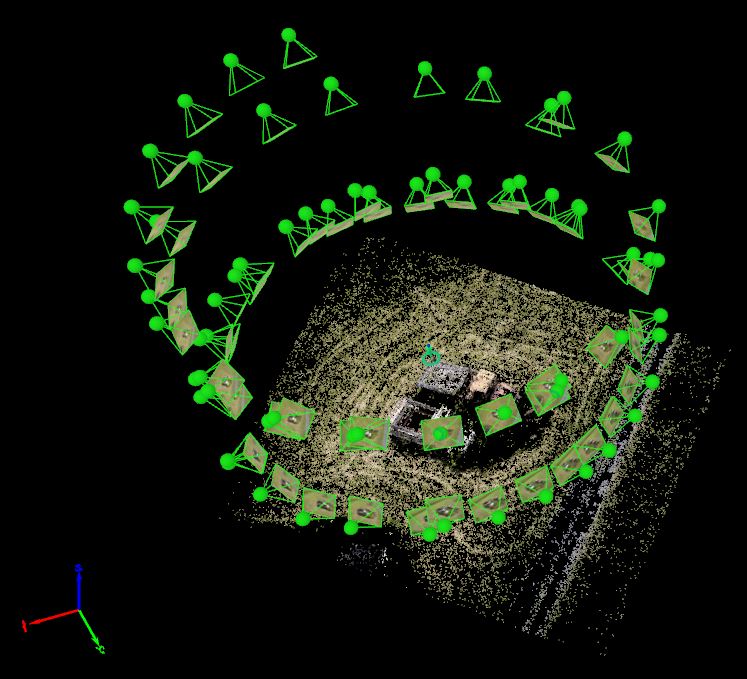

The crew set the autopilot for quick orbits around the trucks’ estimated centerpoint. Many flight controllers on modern UAS are fully integrated with the GPS system on the airframe, so fixing a GPS point and telling the platform to orbit comes down to simple geometry processed by the UAS itself. On a map, this flight ends up looking like a tiny little circle.
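To make that "simple geometry" concrete, here is a minimal Python sketch of how evenly spaced orbit waypoints could be computed around a GPS point. This is not the flight controller's actual code; the function name, the spherical-Earth approximation, and the example coordinates and radius are all assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation (assumption)

def orbit_waypoints(center_lat, center_lon, radius_m, count):
    """Return `count` (lat, lon) waypoints evenly spaced on a circle.

    Uses a small-offset approximation that is only reasonable for
    orbits of tens of metres, like the one described above.
    """
    waypoints = []
    for i in range(count):
        bearing = 2.0 * math.pi * i / count  # radians, clockwise from north
        north_m = radius_m * math.cos(bearing)
        east_m = radius_m * math.sin(bearing)
        # Convert metre offsets to degree offsets at this latitude.
        dlat = math.degrees(north_m / EARTH_RADIUS_M)
        dlon = math.degrees(
            east_m / (EARTH_RADIUS_M * math.cos(math.radians(center_lat)))
        )
        waypoints.append((center_lat + dlat, center_lon + dlon))
    return waypoints

# Hypothetical centerpoint and radius, not the real test field.
for lat, lon in orbit_waypoints(40.0, -75.0, 15.0, 8):
    print(f"{lat:.7f}, {lon:.7f}")
```

Each waypoint sits 15 meters from the center, which is why the whole flight collapses to a dot on any normal map scale.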

In total, 71 images were captured at two target altitudes: 106 meters and 116 meters above the WGS84 ellipsoid, or to put this into human terms, about 10 meters and 20 meters above the ground. The platform recorded the GPS coordinates of each photo center, which helps in post-processing.

Post Flight Setup

Because this data was collected over a very well ground-controlled test field, I pulled ‘photo identifiable points’ (PIDs) on the trucks from our existing surveys to better control the geolocation of the model. In Pix4D Mapper Pro, I used the collected imagery and my “fake” ground control points as input and ran a tie point collection, a computer vision process that matches similar points in overlapping imagery to identify common ground/feature points. This is the first step in the aerial triangulation process.
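For a feel of what tie point matching involves, here is a toy Python sketch of nearest-neighbor descriptor matching with a ratio test, a common technique in computer vision. I don't know what Pix4D does internally, so treat this as an illustration only; the function name, the tiny two-number "descriptors" and the 0.75 threshold are all assumptions.

```python
import math

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match feature descriptors from image A to image B.

    A match is kept only if the best candidate is clearly closer than
    the second best (the ratio test), which rejects ambiguous points.
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Rank every descriptor in image B by distance to this one.
        ranked = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(ranked) < 2:
            continue  # the ratio test needs at least two candidates
        (best, j), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j))
    return matches

# Hypothetical descriptors; real ones have dozens of dimensions or more.
image_a = [(0.0, 0.0), (5.0, 5.0)]
image_b = [(5.1, 5.0), (0.1, 0.0), (9.0, 0.0)]
print(match_descriptors(image_a, image_b))
```

Each surviving pair is a candidate tie point: the same physical spot on a truck seen in two different photos.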

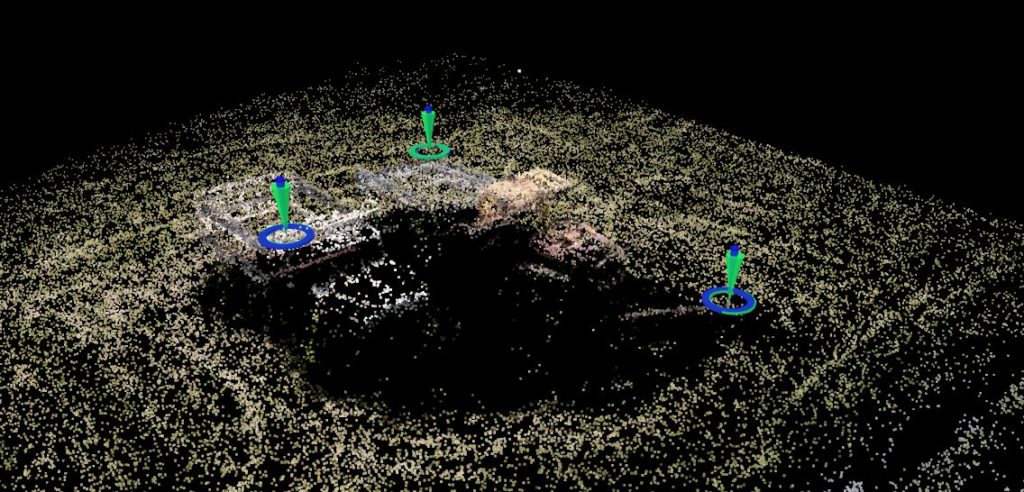

Here, you can see the ground control markers (three of them) and a basic skeleton of a point cloud making up the truck model. This process also adjusts the exterior orientation (EO) parameters, which tell us to a very high precision where each photo was taken and how the camera was oriented in the world. It also adjusts the interior orientation parameters, which refine the assumptions about the camera’s focal length, sensor distortion and lens distortion, enabling us to make good measurements from the photos themselves. These are all essential processes in photogrammetry.
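To show how these two sets of parameters work together, here is a minimal Python sketch of a pinhole camera projection: the exterior orientation (camera position and rotation) moves a world point into the camera's frame, and the interior orientation (focal length and principal point) turns it into a pixel coordinate. It deliberately ignores lens distortion, and the function name and all numbers are assumptions for illustration, not values from this project.

```python
def project_point(point, camera_pos, rotation, focal_px, principal_point):
    """Project a 3D world point into pixel coordinates (no lens distortion).

    rotation is a 3x3 world-to-camera matrix given as a list of rows;
    the camera looks along its own +Z axis.
    """
    # Exterior orientation: shift to the camera position, then rotate.
    d = [p - c for p, c in zip(point, camera_pos)]
    xc, yc, zc = (sum(r * v for r, v in zip(row, d)) for row in rotation)
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Interior orientation: scale by focal length, offset by principal point.
    u = focal_px * xc / zc + principal_point[0]
    v = focal_px * yc / zc + principal_point[1]
    return u, v

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # camera axes aligned with world axes
print(project_point((1.0, 2.0, 10.0), (0.0, 0.0, 0.0), identity,
                    focal_px=1000.0, principal_point=(2000.0, 1500.0)))
# → (2100.0, 1700.0)
```

Aerial triangulation is essentially this calculation run in reverse: the software nudges the position, rotation and camera parameters until the projected tie points and control points line up with where they actually appear in the photos.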

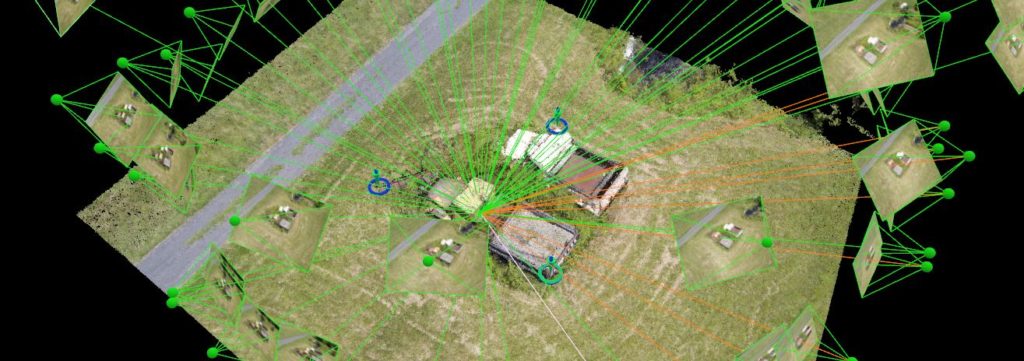

With accurate locations and orientations, Pix4D reconstructs the flight.

We can also look at the accuracy estimations of the model to the ground control. Below is an overview of the Root Mean Square Error (RMSE) values of the aerial triangulation in real world measurements.

| Model error | Value (meters) |
|---|---|
| RMSE X | 0.012297 |
| RMSE Y | 0.015486 |
| RMSE Z | 0.002721 |

We can see here that all of the RMSE values are very small, implying that the model fits the ground control very closely. If doing real survey work, I would highly recommend using blind check points to test accuracy, but since this is just a fun model I’m pretty happy with less than 2 centimeters horizontally and less than 3 millimeters vertically. Odds are these values are too good to be true, since they fall within the margin of error of the GPS and sensor calibration, but that’s a story for another time.
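For anyone who wants to check the arithmetic, here is a small Python sketch of how an RMSE is computed from residuals, and how the X and Y values from the table combine into a single horizontal figure. The `rmse` helper and the sample residuals are hypothetical; only the three RMSE values come from the report.

```python
import math

def rmse(residuals):
    """Root mean square error of a list of residuals (model minus control)."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))

# Hypothetical residuals in meters, just to show the calculation.
print(rmse([0.01, -0.02, 0.015]))

# Values from the table above, in meters.
rmse_x, rmse_y, rmse_z = 0.012297, 0.015486, 0.002721

# Combine the X and Y components into one horizontal error.
horizontal = math.hypot(rmse_x, rmse_y)
print(f"horizontal RMSE: {horizontal * 100:.2f} cm")
print(f"vertical RMSE:   {rmse_z * 1000:.2f} mm")
```

The combined horizontal RMSE comes out just under 2 centimeters, which is where that figure comes from.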

Densification

With the cameras properly calibrated, another matching process is run. This time, the objective is to fill in the gaps between tie points with data. The software attempts a pixel-to-pixel match for every pixel in each photo, which allows for a dense reconstruction of the subject in three dimensions. Every match is given an X, Y and Z coordinate, as well as the RGB color values of that pixel, and the result is a point cloud. From this, we can derive products such as digital surface models (which can then become digital elevation models, digital terrain models, etc.) and photogrammetric meshes. In a mapping project, this would also serve as the basis for orthorectification.
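As a rough idea of how a point cloud becomes a digital surface model, here is a minimal Python sketch that bins points into grid cells and keeps the highest Z value in each one. Production software does far more (filtering, interpolation, gap filling), so this is a simplification; the function name, cell size and sample points are assumptions.

```python
def rasterize_dsm(points, cell_size):
    """Build a simple DSM as a dict mapping (col, row) -> highest Z in that cell.

    Each point is (x, y, z, r, g, b); color is ignored here.
    """
    min_x = min(p[0] for p in points)
    min_y = min(p[1] for p in points)
    dsm = {}
    for x, y, z, *_rgb in points:
        cell = (int((x - min_x) // cell_size), int((y - min_y) // cell_size))
        # A surface model keeps the top of whatever is in the cell.
        if cell not in dsm or z > dsm[cell]:
            dsm[cell] = z
    return dsm

# Hypothetical points: two fall in the same cell, one in another.
cloud = [
    (0.0, 0.0, 1.0, 120, 110, 100),
    (0.2, 0.1, 2.5, 130, 115, 100),
    (1.3, 0.4, 0.8, 90, 95, 80),
]
print(rasterize_dsm(cloud, cell_size=0.5))
# → {(0, 0): 2.5, (2, 0): 0.8}
```

Cells with no points are simply missing from the result, which is exactly the kind of hole a real workflow has to interpolate across.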

The dense point cloud here looks pretty great.

The Mesh

Photogrammetric meshes have become more popular in recent years as everything from game consoles to phones to web browsers has moved into the 3D realm. Photogrammetric models are used all over: mapping, archaeology, video game design, and 3D printing come to mind. A mesh is similar to what GIS people know as a TIN (Triangulated Irregular Network). The dense point cloud is filtered to extract the ‘best,’ most reliable points that make up the structure of the model. These points are then triangulated to produce a solid surface. Finally, because the software knows the parameters of the camera, it can project the texture of the object onto the generated surface, creating a photo-realistic model. A mesh differs from a point cloud in that it is really a solid object.
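To illustrate what "triangulated" means in practice, here is a simplified Python sketch that turns a regular grid of heights into vertices and triangles, two triangles per grid cell. Real mesh generation works on irregular points and uses more sophisticated methods, so this is only a stand-in; the function name and the sample heights are assumptions.

```python
def grid_to_triangles(heights):
    """Turn a grid of Z values into (vertices, triangles).

    heights is a list of rows. Vertices are (col, row, z) tuples stored
    row by row; each triangle is a tuple of three vertex indices.
    """
    rows, cols = len(heights), len(heights[0])
    vertices = [(c, r, heights[r][c]) for r in range(rows) for c in range(cols)]
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            i = r * cols + c  # index of this cell's first corner
            # Split the cell into two triangles along one diagonal.
            triangles.append((i, i + 1, i + cols))
            triangles.append((i + 1, i + cols + 1, i + cols))
    return vertices, triangles

# Hypothetical 2 x 3 grid of heights in meters.
verts, tris = grid_to_triangles([[0.0, 0.5, 0.2], [0.1, 0.9, 0.3]])
print(len(verts), "vertices,", len(tris), "triangles")
# → 6 vertices, 4 triangles
```

The triangles share vertices by index, which is what makes the result a connected surface rather than a loose collection of points.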

Just look at these dope trucks. Look at them!

A coworker put together a promotional video showing the steps in this process. I highly recommend checking it out (in 1080p)!

What’s the point?

Glad you asked, and forgive me for the forced pun. As I mentioned, photogrammetric models, both meshes and point clouds, are used in a wide variety of fields. Perhaps the widest and most obvious use of these models is for measurement and survey, and related fields such as forestry, hydrology, geology, GIS, etc. This data can be integrated into nearly any GIS and used in the analytical workflows we’re all accustomed to.

Photogrammetry has been used for over a hundred years for elevation modeling and mapping. Now it’s just digital, and we can compute millions of matches in minutes rather than running a manual stereo compiler. What’s more, drones and processing software are becoming more affordable and easier to use. That enables modeling such as this one: a small area of interest (AOI) and high-resolution imagery with highly accurate measurements. Need to model a quarry or a lake bed or a building? No problem. It should be noted that for larger AOIs, UAS become impractical and extremely expensive, as a crewed aircraft can cover in a few hours what it would take a UAS pilot weeks to do.

Photogrammetry is becoming more democratized in our industry. That said, I truly believe it to be a specialist field. Anyone can learn it, but it takes a lot of practice and trial and error to build the backbone of knowledge necessary to diagnose when things go wrong and fully understand the relationship between sensor models, data and decision making. If you’re interested in learning more about the field of photogrammetry, please look into the American Society for Photogrammetry and Remote Sensing (ASPRS).
